Imagine, if you will, a seasoned explorer, compass in hand, venturing into uncharted territory. Our intrepid adventurer believes they’re charting a course to the Eternal Apple Tree of Van Diemen’s Land, meticulously mapping every twist and turn. Yet, unbeknownst to them, their compass is subtly, almost imperceptibly, miscalibrated. Each step, though taken with the utmost precision, drifts them further and further from their true objective. They might discover something valuable, sure, perhaps even a fascinating new species of, say, a bioluminescent mushroom (yes, these are real!). But it won’t be the Eternal Apple Tree, will it?

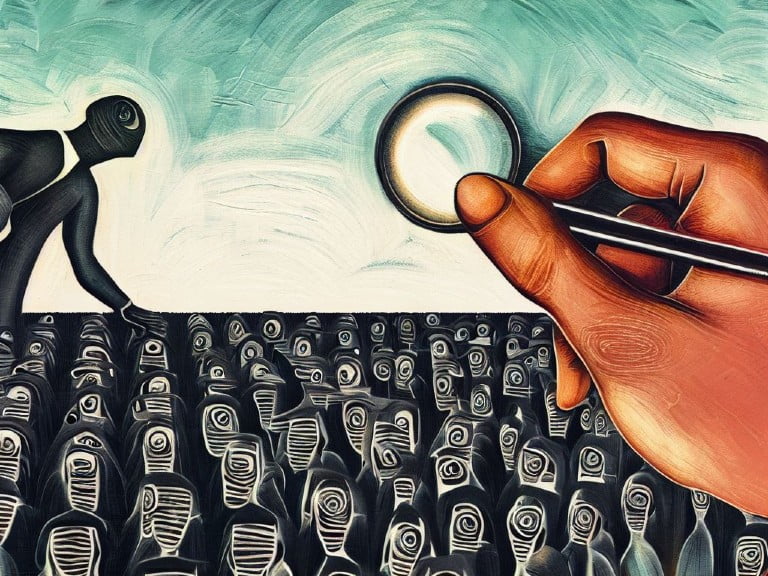

This, my friends, is often the insidious, quiet menace lurking in the shadows of many a Conversion Rate Optimisation (CRO) and User Experience (UX) testing regimen. We believe we’re measuring the impact of that snazzy new call-to-action button, or perhaps the subtle reordering of content on a product page. We meticulously craft our hypotheses, define our metrics, and then, with bated breath, launch our experiments, eagerly awaiting the glorious lift in conversions. But here’s the rub, the very crux of our discussion today: are we truly testing what we think we’re testing? Or, like our unwitting explorer, are we simply measuring the wrong thing (getting distracted by these beauties), albeit with scientific rigor? This, dear reader, is the often-overlooked, yet profoundly impactful, realm of construct validity.

The Elephant in the Server Room: Understanding Construct Validity

Let’s cut to the chase, shall we? Construct validity, in its most digestible form, asks a rather fundamental question: are you measuring what you think you’re measuring? It’s about the fidelity of your operationalisation. Think about it: when you’re running a CRO test, you’re not just swapping out a button colour. You’re typically trying to influence something far more abstract, something like “user engagement”, “perceived trustworthiness”, or “decision-making friction”. These aren’t tangible, easily quantifiable entities like the number of clicks. No, they are psychological constructs, much like “intelligence” or “happiness” in a scientific research context. And boy, oh boy, does this open up a Pandora’s box of potential misinterpretations if we’re not careful.

Many a well-intentioned CRO practitioner or analyst, myself included in my earlier, less enlightened (though still equally handsome then as today) days, tends to jump straight into A/B testing with a focus on metrics like click-through rates, conversion rates, or engagement rates. These are undeniably important, don’t get me wrong. They are the visible tip of the iceberg. But what lies beneath? What underlying psychological processes, what cognitive shifts, are we attempting to capture with these surface-level indicators?

If our test, for instance, aims to reduce “cognitive load” on a complex form, and our primary metric is form completion rate, are we truly measuring the reduction of cognitive load? Or are we simply measuring whether users eventually manage to slog through it, perhaps out of sheer stubbornness or desperate need? The distinction is crucial, isn’t it? It’s like trying to measure a guinea pig’s happiness solely by how many pea flakes it consumes. Sure, pea flake consumption might correlate with happiness, but it doesn’t define happiness. A happy guinea pig might just prefer a good nap, you know?

The literature on construct validity, particularly in psychology and educational measurement, is vast and, frankly, a bit daunting at times. But we don’t need a PhD in psychometrics to grasp its core tenets. Broadly speaking, construct validity encompasses several facets.

There’s convergent validity, which basically asks if your measure correlates highly with other measures that are theoretically supposed to measure the same construct. Then there’s discriminant validity (or divergent validity), which, conversely, asks if your measure doesn’t correlate with measures of theoretically different constructs. Imagine you measure “user friendliness” and find that it correlates highly with “website speed”. Are these truly distinct constructs? Perhaps, but we need to be clear. If it also correlates highly with, say, the number of blue pixels on the page, then we might have a problem. That’s a silly example, granted, but it illustrates the point.
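To make the convergent and discriminant checks a little more concrete, here is a minimal sketch in Python. Everything in it is an assumption for illustration: the three lists are made-up per-participant scores, and the names `friendliness_survey`, `sus_scores` and `blue_pixel_share` are hypothetical. The idea is simply that your measure should correlate strongly with an established measure of the same construct, and weakly with something theoretically unrelated.

```python
from math import sqrt

def pearson(xs, ys):
    """Pearson correlation coefficient for two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)

# Made-up scores for six participants (illustrative only, not real data).
friendliness_survey = [4.5, 3.0, 4.0, 2.0, 5.0, 3.5]       # our own "user friendliness" rating
sus_scores = [85, 60, 78, 45, 92, 70]                      # an established usability measure
blue_pixel_share = [0.30, 0.10, 0.20, 0.25, 0.15, 0.35]    # theoretically unrelated

# Convergent check: should be high if both capture the same construct.
convergent = pearson(friendliness_survey, sus_scores)

# Discriminant check: should be near zero if the constructs really differ.
discriminant = pearson(friendliness_survey, blue_pixel_share)

print(f"convergent r = {convergent:.2f}, discriminant r = {discriminant:.2f}")
```

With a sample this small the coefficients are only a rough signal, so treat them as a prompt for further questions rather than a verdict.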

And then there’s face validity, which is the most superficial, asking if the measure appears to be measuring what it’s supposed to. It’s the “sniff test,” if you will. And while it’s a good starting point, it’s far from sufficient. Just because a button looks like it should increase conversions doesn’t mean it actually does so for the reasons we believe. The guinea pigs on what is objectively the best channel on YouTube often look like they’re contemplating the mysteries of the universe while munching on hay, but I assure you, it’s mostly just chewing.

Finally, content validity refers to how well the measure represents all facets of a given construct. If “user engagement” is your construct, does your test only measure clicks, or does it also consider time on page, scroll depth, interaction with dynamic elements, and perhaps even qualitative feedback? If not, you might be missing a significant chunk of the pie.

Operationalising Abstraction: The Devil in the Details

So, how do we operationalise these abstract constructs in a way that truly serves our CRO and UX testing goals? This, my friends, is where the rubber meets the road, and where many a well-intentioned experiment goes awry. Operationalisation is the process of defining how you will measure a concept that isn’t directly observable. For example, if your abstract construct is “website trustworthiness”, how do you translate that into measurable variables?

Many companies, in their haste to generate results, will define “trustworthiness” simply by the number of sales made through a particular page. But is that truly measuring trustworthiness, or is it measuring, perhaps, price sensitivity, product demand, or even brand loyalty that existed prior to the page experience? It’s a bit like assuming someone loves your cooking just because they ate everything on their plate. Maybe they were just really, really hungry!

This reminds me of a rather unfortunate incident where an agency partner, convinced their new live chat widget would build “trust”, found no measurable impact on conversions. Upon closer inspection, their target audience, it turned out, primarily consisted of professionals who really needed servicing over the phone, while the chat widget was primarily attracting their lowest value prospective clients. They were measuring their intent to build trust, not the actual impact on trust.

A more robust approach to operationalising “website trustworthiness” might involve a multi-faceted strategy. You could look at the presence and prominence of trust signals (security badges, customer testimonials, professional accreditations). You could also incorporate qualitative data through user interviews, asking direct questions about their perceptions of trustworthiness. Better yet, you could employ eye-tracking studies to see if users actually notice and dwell on these trust signals.

Furthermore, consider metrics like return visits (though this can be tricky to attribute solely to trustworthiness), or even social media mentions and sentiment analysis related to your brand’s perceived reliability. The point is, one single metric is rarely sufficient to capture the richness of an abstract construct. To me, it’s like trying to measure the performance of a concert pianist by only counting the number of notes played.

Let’s consider another common construct: “ease of use” or “usability.” Many will operationalise this by simply looking at task completion rates or time on task. While these are certainly indicators, they don’t tell the whole story. A user might complete a task, but with immense frustration and cognitive effort. They might have grumbled and muttered expletives under their breath the entire time (like me, every time I have to use a government website). Is that truly “easy to use”? Absolutely not! A more nuanced operationalisation of “ease of use” might incorporate:

- Subjective measures: Post-task questionnaires (e.g., System Usability Scale – SUS, though be careful not to overdo the surveys), or open-ended feedback.

- Behavioural observations: Number of errors made, number of clicks to complete a task, backtracking behaviour, or even subtle signs of frustration observed during user testing sessions (e.g., sighing, excessive scrolling, repeated attempts).

- Eye-tracking data: Where are users looking? Are they struggling to find information? Are their eye movements efficient or erratic?

- Heatmaps and scroll maps: Are users engaging with the elements we expect them to, or are they getting lost in a sea of irrelevant content?
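For the subjective measures, the System Usability Scale has a standard scoring rule that is easy to get wrong by hand: odd-numbered items contribute the response minus 1, even-numbered items contribute 5 minus the response, and the total is multiplied by 2.5 to give a score out of 100. A small sketch, where the function name `sus_score` and the sample answers are my own illustration:

```python
def sus_score(responses):
    """Score one participant's ten SUS answers (each 1 to 5) on a 0-100 scale."""
    if len(responses) != 10:
        raise ValueError("SUS needs exactly 10 responses")
    if any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("each response must be an integer from 1 to 5")

    total = 0
    for item_number, response in enumerate(responses, start=1):
        if item_number % 2 == 1:
            # Odd items are positively worded: higher agreement is better.
            total += response - 1
        else:
            # Even items are negatively worded: lower agreement is better.
            total += 5 - response
    return total * 2.5

# Made-up answers from a single participant.
participant = [4, 2, 5, 1, 4, 2, 4, 2, 5, 1]
print(sus_score(participant))  # 85.0
```

The result is a single number per participant, which makes it easy to sit alongside the behavioural measures rather than replace them.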

The key here is triangulation – using multiple, distinct methods to measure the same construct. If all these different measures point to the same conclusion, you have much greater confidence in your construct validity. It’s like getting three different independent witnesses to describe the same event. If their stories align, you’re on much firmer ground than if you only heard one account. My personal observation? Most companies don’t dedicate nearly enough time to this initial, crucial step of really defining what it is they are trying to measure. They rush to the tools, you see, rather than the underlying purpose.
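Triangulation can be sketched as a simple agreement check across measures. The snippet below is only an illustration under loud assumptions: the numbers are invented, the 5% `tolerance` is an arbitrary threshold I picked, and it ignores statistical significance entirely. What it shows is the habit of asking whether several independent measures of “ease of use” point the same way before believing any one of them.

```python
# Hypothetical control vs. variant means for three measures of "ease of use".
# Format: name -> (control_mean, variant_mean, higher_is_better)
measures = {
    "sus_score": (62.0, 71.0, True),
    "errors_per_task": (2.4, 1.6, False),
    "task_time_seconds": (95.0, 97.0, False),
}

def direction(control, variant, higher_is_better, tolerance=0.05):
    """Return +1 if the variant looks better, -1 if worse, 0 if within tolerance."""
    relative_change = (variant - control) / control
    if abs(relative_change) <= tolerance:
        return 0
    improved = relative_change > 0 if higher_is_better else relative_change < 0
    return 1 if improved else -1

def triangulate(measures):
    """Summarise whether the measures that moved all moved in the same direction."""
    signals = {name: direction(*values) for name, values in measures.items()}
    verdicts = set(signals.values()) - {0}
    if not verdicts:
        summary = "no clear movement"
    elif verdicts == {1}:
        summary = "measures agree: variant better"
    elif verdicts == {-1}:
        summary = "measures agree: variant worse"
    else:
        summary = "measures conflict"
    return signals, summary

signals, summary = triangulate(measures)
print(signals)
print(summary)
```

A “measures conflict” result is arguably the most useful one, because it tells you that at least one of your measures is not capturing the construct you think it is.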

The Pitfalls of Proxy Metrics and Surrogates

A common trap in CRO and UX testing is the over-reliance on proxy metrics or surrogates. These are measures that are assumed to correlate with the true construct you’re interested in, but don’t directly measure it. For instance, using “page views” as a proxy for “user engagement”. While more page views might indicate higher engagement, they could also indicate users struggling to find information, or accidentally clicking on links. Similarly, “time on page” as a measure of “content quality.” A user might spend a long time on a page because the content is truly compelling, or they might be utterly confused and desperately trying to decipher poorly written copy. This is where construct validity screams for attention.

A good analogy might be measuring a chef’s skill solely by the number of dirty dishes they produce. While a busy chef might produce a lot of dirty dishes, a truly skilled chef might also be incredibly efficient and produce less waste. Conversely, a terrible chef might still produce a mountain of dishes from failed attempts. The number of dirty dishes is a proxy, not a direct measure of culinary prowess. We need to look deeper.

One classic example of a construct validity failure due to relying on a poor proxy metric can be found in the early days of online advertising. “Click-through rate” (CTR) was king when I was first starting out in paid media, almost 14 years ago. Everyone optimised for CTR, believing it was the ultimate indicator of ad effectiveness and user interest. But what happened? Advertisers started creating deceptive, “clickbaity” ads that generated high CTRs but led to terrible user experiences and ultimately, low conversion rates or high bounce rates once users landed on the actual page.

Why? Because “CTR” was a proxy for “interest” or “relevance”, but it failed to capture the nuances of genuine, valuable interest that led to desired outcomes. The advertisers were, in essence, measuring the wrong thing, even if the numbers looked good on paper. Fast forward to today, and I am finding many instances where AI-powered platforms are optimising for these superficially applicable outcomes (though for many of these platforms, they directly benefit from these interactions, so perhaps this is a different story).
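The CTR trap is easy to reproduce with a few lines of arithmetic. In this sketch the two ads and all their numbers are invented for illustration; the point is only that ranking by the proxy (CTR) and ranking by the outcome you actually care about (conversions per impression) can crown different winners.

```python
# Made-up ad performance figures (illustrative only).
ads = [
    {"name": "clickbait_headline", "impressions": 10_000, "clicks": 800, "conversions": 8},
    {"name": "plain_headline", "impressions": 10_000, "clicks": 300, "conversions": 24},
]

def click_through_rate(ad):
    """Proxy metric: share of impressions that produced a click."""
    return ad["clicks"] / ad["impressions"]

def conversions_per_impression(ad):
    """Outcome metric: share of impressions that ended in a conversion."""
    return ad["conversions"] / ad["impressions"]

best_by_proxy = max(ads, key=click_through_rate)["name"]
best_by_outcome = max(ads, key=conversions_per_impression)["name"]

print("Winner by CTR:", best_by_proxy)
print("Winner by conversions per impression:", best_by_outcome)
```

If the two winners disagree, the proxy has drifted away from the construct it was supposed to stand in for.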

Crafting Robust Hypotheses: Beyond the Obvious

The journey to robust testing methods truly begins with well-formed hypotheses. Too often, hypotheses are framed in a very superficial manner: “Reducing the number of fields in our contact form will increase conversions”. While this might be a perfectly valid testable statement, it’s not a strong scientific hypothesis because it lacks a clear link to the underlying construct you’re trying to influence. It’s an observation, not a causal inference rooted in a theoretical understanding of user behaviour.

A more construct-valid approach would involve framing hypotheses that explicitly link the proposed change to the underlying psychological construct, and then to the measurable outcome. For instance:

Weak Hypothesis:

“Changing the hero image to a smiling person will increase sign-ups”.

Stronger, Construct-Valid Hypothesis:

“We hypothesise that employing a hero image featuring a smiling, relatable human face will enhance perceived warmth and approachability (construct), thereby increasing user trust (another construct), which will, in turn, lead to a higher propensity to engage with the sign-up form (measurable behaviour), resulting in a statistically significant increase in sign-ups”.

Do you see the difference? The stronger hypothesis explicitly articulates the why. It posits a theoretical mechanism. This forces you to think about how you would actually measure “perceived warmth and approachability” or “user trust,” not just the final sign-up number. This is where the integration of qualitative research becomes invaluable.
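One way to keep yourself honest here is to write the hypothesis down as a structure in which every construct must name how it will be measured. The sketch below is purely my own illustration: the `Construct` and `Hypothesis` classes, and the measures listed, are hypothetical and not a standard template. It flags any construct in the causal chain that has no measure attached.

```python
from dataclasses import dataclass, field
from typing import List

@dataclass
class Construct:
    """An abstract construct and the concrete measures chosen for it."""
    name: str
    measures: List[str] = field(default_factory=list)

@dataclass
class Hypothesis:
    """Change -> constructs -> measurable outcome, as in the stronger hypothesis."""
    change: str
    constructs: List[Construct]
    outcome_metric: str

    def unmeasured_constructs(self):
        """Names of constructs the test currently has no way of observing."""
        return [c.name for c in self.constructs if not c.measures]

hero_image_test = Hypothesis(
    change="Hero image featuring a smiling, relatable human face",
    constructs=[
        # The measure below is an assumed example, not a prescribed instrument.
        Construct("perceived warmth and approachability",
                  ["pre-test interview rating of warmth"]),
        Construct("user trust"),  # deliberately left without a measure
    ],
    outcome_metric="sign-up rate",
)

print(hero_image_test.unmeasured_constructs())  # ['user trust']
```

An empty list does not prove the hypothesis is sound, but a non-empty one tells you exactly where the “why” is still an untested assumption.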

Before you even launch the A/B test, conduct some user interviews or surveys to gauge initial perceptions of warmth and approachability. Do users feel that warmth? If they don’t, your hypothesis about the image’s effect on warmth might be flawed, irrespective of the sign-up rates. This iterative process of refinement is critical.

This isn’t just academic navel-gazing; it has direct practical implications. If your green button does increase conversions, but your underlying hypothesis about its impact on “urgency” was wrong, you might misattribute the success. Perhaps your form with fewer fields does see a higher submission rate, but you have to ask if there are any down-stream impacts that might also be at play (perhaps your old form pre-qualified leads better, resulting in better quality leads).

If you don’t understand the true underlying mechanism, you can’t reliably replicate that success, nor can you generalise it to other contexts. It’s like that friend who won the meat pack and two bottles of wine at the bowls club raffle, who goes on to assume their lucky socks are the reason. They might keep wearing them, but the next time, they probably still lose (and, if they haven’t washed said socks, they will certainly be going without me next time).

According to a paper by Robert M. Groves and Mick P. Couper on online survey methodology, understanding the cognitive processes respondents use to answer questions is vital for ensuring construct validity in survey data. This principle extends directly to our CRO and UX testing; we need to understand the why behind user behaviour, not just the what.

Beyond the A/B Test: Integrating Qualitative Insights

For me, the single biggest oversight in many CRO programs is the failure to adequately integrate qualitative research before, during, and after A/B tests. Think of quantitative data (like A/B test results) as telling you what is happening, while qualitative data (like user interviews, usability tests, or open-ended survey responses) tells you why it’s happening. Without the “why,” you’re essentially flying blind.

Before you even design an A/B test for a specific hypothesis, conduct exploratory qualitative research. Talk to your users. Observe them interacting with your website. Ask them open-ended questions. What are their pain points? What are their motivations? What language do they use to describe their experience? This will help you identify the true constructs that are important to your users and inform your operationalisation.

For example, if you believe a cluttered navigation is leading to “user frustration,” don’t just redesign it and test for lower bounce rates. First, conduct usability tests where you observe users attempting to navigate, paying close attention to their verbalisations and body language. Do they express frustration? Do they sigh? Do they articulate difficulty finding what they need? This qualitative data helps validate your initial assumption about “user frustration” as a relevant construct.

During the test, consider implementing micro-surveys or feedback widgets on the live site, particularly for the losing or winning variations. Ask users about their immediate perceptions or feelings. If your hypothesis was about “clarity”, ask a quick question about how clear the content felt to them. This real-time feedback can provide invaluable insights into the construct you’re trying to measure. Seriously, though, this is an absolutely critical point that often gets missed. We’re so focused on the numbers post-test, we forget to ask the humans while they’re interacting with the experience.

After the test, if you have a clear winner, don’t just move on to the next test. Conduct follow-up qualitative research with users who experienced the winning (or losing) variation. Why did it resonate with them? What aspects did they find appealing? Did it indeed address the underlying construct you hypothesised?

This helps confirm or refine your understanding of why your test succeeded, providing deeper insights that can be applied to future optimisations, rather than just blindly replicating a successful element without understanding its root cause. It’s the difference between knowing what ingredients made a cake taste good and knowing why those ingredients, combined in that particular way, created that delicious flavour. The latter is far more valuable for a chef, wouldn’t you say?

A study published in the Journal of Marketing Research by Dana L. Alden, Wayne D. Hoyer, and Pamela G. Batra highlights the importance of understanding the cognitive and affective processes underlying consumer responses to advertising, stressing that without this deeper understanding, the effectiveness of an ad might be misattributed or not fully leveraged. This echoes the need for qualitative insights to bolster construct validity in CRO.

The Criterion Question: Are We Measuring the Right Outcome?

Beyond ensuring you’re measuring the right construct, there’s also the crucial aspect of criterion validity. This asks whether your measure is predictive of, or correlates with, a relevant external criterion. In CRO, our ultimate criterion is usually a business outcome: sales, sign-ups, lead generation, customer retention, etc.

But here’s the kicker: sometimes, we’re optimising for a proxy criterion, not the true business outcome. For example, if your business objective is “increase customer lifetime value (CLTV),” but you’re only optimising for “initial sign-ups,” you might be missing the mark. A design change might lead to more sign-ups, but if those new sign-ups churn quickly or never become high-value customers, have you truly achieved your business objective? This is where the alignment between your test metrics, your constructs, and your ultimate business goals becomes paramount. It’s like training a racehorse to be the fastest out of the gate, but completely neglecting its stamina for the rest of the race. What’s the point of a quick start if it can’t finish?

To address this, ensure your test metrics are clearly linked to your long-term business KPIs. If your goal is CLTV, consider running longer-term tests that track cohorts over time to see if early interventions truly impact later value. This often means embracing a slower, more deliberate testing cadence, something many organisations find challenging in the pursuit of quick wins. However, a series of quick wins that doesn’t move the needle on core business objectives ultimately amounts to chasing vanity metrics. I guess this is why my dad goes so wild with pulling the dandelions out of his lawn; he could always mow over them and they’re gone… until they come back at least. Or he could do what he actually does: obsessively dig them out like he is evicting something that has personally wronged him!
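The cohort idea can be sketched with a handful of numbers. Everything here is assumed for illustration: the figures are invented, and “revenue in the first 90 days” is just one possible stand-in for longer-term value. The sketch compares each variant on the proxy criterion (sign-up rate) and on a value-based criterion (90-day revenue per visitor).

```python
# Made-up cohort data per test variant (illustrative only).
cohorts = {
    "control": {"visitors": 5000, "signups": 250, "revenue_90d": 30000.0},
    "variant": {"visitors": 5000, "signups": 325, "revenue_90d": 29250.0},
}

def summarise(cohort):
    """Return the proxy criterion and two value-based criteria for one cohort."""
    return {
        "signup_rate": cohort["signups"] / cohort["visitors"],
        "revenue_per_signup": cohort["revenue_90d"] / cohort["signups"],
        "revenue_per_visitor": cohort["revenue_90d"] / cohort["visitors"],
    }

summary = {name: summarise(cohort) for name, cohort in cohorts.items()}

for name, stats in summary.items():
    print(
        f"{name}: sign-up rate {stats['signup_rate']:.1%}, "
        f"90-day revenue per sign-up {stats['revenue_per_signup']:.2f}, "
        f"per visitor {stats['revenue_per_visitor']:.2f}"
    )

wins_on_signups = summary["variant"]["signup_rate"] > summary["control"]["signup_rate"]
wins_on_value = (
    summary["variant"]["revenue_per_visitor"] > summary["control"]["revenue_per_visitor"]
)
print("Variant wins on sign-ups:", wins_on_signups)
print("Variant wins on 90-day value per visitor:", wins_on_value)
```

In this invented example the variant would have been declared a winner on day one and quietly cost money by day ninety, which is exactly the misalignment described above.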

Another aspect of criterion validity, particularly relevant in UX testing, is the distinction between process metrics and outcome metrics. Process metrics might include task completion time or number of clicks. Outcome metrics are the ultimate goals, like successful purchase or information acquisition. While optimising process metrics can be valuable, always ask if they truly lead to the desired outcome. A user might complete a task quickly, but if they feel negative about the experience or don’t return, then the “speed” wasn’t a truly valuable criterion.

The Role of Theory: Guiding Our Testing Endeavours

This entire discussion loops back to the importance of psychological theory in guiding our CRO and UX testing. We’re not just throwing spaghetti at the wall to see what sticks. We should be formulating our hypotheses based on established principles of human behaviour, cognitive psychology, and consumer decision-making.

For instance, if you’re testing the impact of scarcity on conversion, you’re leaning on Robert Cialdini’s principle of scarcity from his seminal work (and an absolute cracker of a read, if you ask me), Influence: The Psychology of Persuasion. If you’re testing the impact of social proof, you’re also drawing from his work. When you understand the underlying psychological principles, you can craft more nuanced tests and interpret your results with greater confidence. You can also anticipate potential pitfalls. For example, Cialdini also points out that scarcity can backfire if it’s perceived as manipulative. This theoretical understanding helps refine your construct of “scarcity” and how you operationalise it.

Consider the concept of “processing fluency”, which suggests that information that is easier to process is perceived as more trustworthy or accurate. If you’re testing a new font, a cleaner layout, or simpler language, your underlying construct might be “processing fluency.” Your hypothesis would then link the visual change to an increase in processing fluency, which then leads to greater perceived trustworthiness, and ultimately, a higher conversion rate.

How do you measure processing fluency? Perhaps through implicit measures like response times to certain questions, or through user feedback on the perceived “ease of understanding.” This is far more robust than just saying “we changed the font and conversions went up”. It tells you why it went up, and therefore helps you apply that learning elsewhere.

Remember, this isn’t about becoming academics or overcomplicating the core process for the sake of feeling fancy and smart; it’s about becoming better practitioners. It’s about elevating our craft from mere button-swapping to truly understanding and influencing human behaviour on our websites. It’s about moving from simply observing correlations to understanding causal mechanisms. This is the path to truly impactful CRO and UX work.

Avoiding the Clever Hans Effect: Unintended Influences

Ever heard of Clever Hans? He was a horse in early 20th-century Germany who seemingly could perform arithmetic. His owner would ask him to add or subtract, and Hans would tap his hoof the correct number of times. It was astounding! People travelled from far and wide to witness this intellectual marvel. Until a psychologist, Oskar Pfungst, figured it out. Hans wasn’t doing math; he was picking up on subtle, unconscious cues from his owner—tiny changes in posture or breathing that signalled when to stop tapping. The owner wasn’t intentionally cueing Hans, but the horse was responding to these unintended influences.

In CRO and UX testing, we often fall prey to a similar “Clever Hans effect”. Our tests might be influenced by factors we haven’t accounted for, inadvertently skewing our results and leading us to incorrect conclusions about the constructs we’re trying to measure. This is another facet of construct validity: ensuring that external or confounding variables aren’t contaminating your measurement of the intended construct.

For example, imagine you launch a test designed to increase “sense of urgency” on a product page by adding a countdown timer. You see a significant lift in conversions. Great, right? But what if, unbeknownst to you, the day you launched the test, a major competitor ran out of stock, driving their customers to your site? Perhaps there was a flash sale on another, complementary product on your own site that suddenly made your product more appealing. Or, perhaps your paid media platform (like Google Ads or Meta Ads) started serving your ads a little differently for whatever reason (like reaching a confidence level in its AI training). Your conversion lift might be due to these external factors, not the countdown timer’s impact on urgency. Your construct of “urgency” wasn’t what truly drove the results.

To mitigate the Clever Hans effect, we need to:

- Control for external factors: This means ensuring consistency in traffic sources, marketing campaigns, and even major news events during your test period. If something significant happens externally that could influence user behaviour, acknowledge it and consider its impact on your results.

- Segment and analyse: Look at your test results through different segments (e.g., new vs. returning users, different traffic sources). Do all segments show the same trend? If not, it could indicate an external influence.

- Run concurrent baseline tests: Sometimes, it’s beneficial to run a simple A/A test (two identical versions) alongside your A/B test. This can help you detect anomalies or widespread site issues that might be affecting all variations.

- Scrutinise the magnitude of change: If a small, seemingly minor change leads to an exceptionally large conversion lift, it might be a red flag. While “unicorns” do happen, often an unusually high lift suggests an underlying confounding variable.
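The segmenting and A/A steps above can be sketched with a pooled two-proportion z-test, using only Python’s standard library. The counts are invented and the segment names are hypothetical. Note also that slicing results into many segments increases the chance of a spurious “significant” finding, so treat this as a screening aid rather than proof.

```python
from math import erf, sqrt

def two_proportion_z(conv_a, n_a, conv_b, n_b):
    """Pooled two-proportion z-test; returns (z, two-sided p-value)."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    standard_error = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / standard_error
    # Standard normal CDF expressed through the error function.
    normal_cdf = 0.5 * (1 + erf(abs(z) / sqrt(2)))
    return z, 2 * (1 - normal_cdf)

# Made-up counts: (control conversions, control visitors,
#                  variant conversions, variant visitors)
segments = {
    "new_users": (200, 4000, 210, 4000),
    "returning_users": (150, 2000, 230, 2000),
}
aa_test = (180, 3000, 185, 3000)  # two identical versions, run concurrently

results = {name: two_proportion_z(*counts) for name, counts in segments.items()}
aa_z, aa_p = two_proportion_z(*aa_test)

for name, (z, p) in results.items():
    print(f"{name}: z = {z:.2f}, p = {p:.4f}")
print(f"A/A check: z = {aa_z:.2f}, p = {aa_p:.4f}")
```

In this invented data the lift shows up only among returning users while the A/A check stays quiet, which would be a cue to ask what else those returning users were exposed to during the test period before crediting the countdown timer.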

My personal experience suggests that the temptation to attribute success solely to the tested element, ignoring broader market dynamics or concurrent campaigns, is very strong.

Ethical Considerations: The Unseen Construct

Beyond the purely methodological aspects, there’s an often-overlooked ethical dimension to construct validity in CRO and UX. Are we, in our pursuit of conversions, inadvertently manipulating users or exploiting their cognitive biases in ways that don’t genuinely serve their best interests? For instance, if you’re using dark patterns—UI elements designed to trick users into doing something they might not otherwise do—you might achieve a conversion lift. But are you truly building “trust” or “customer loyalty”? Or are you just temporarily exploiting a momentary lapse in attention?

While these dark patterns might appear to “work” in the short term by increasing a certain metric, they fundamentally undermine constructs like “transparency”, “user autonomy”, and “long-term customer satisfaction”. If your business relies on repeat purchases or sustained engagement, eroding these constructs through manipulative tactics will ultimately backfire. Be sure to scrutinise your tests to make sure that you are working in the best interests of the user, not just what is easy for the user.

This means that our operationalisation of constructs needs to extend beyond immediate conversion metrics to consider long-term customer well-being and brand reputation. When measuring “customer satisfaction”, for example, we might include metrics like Net Promoter Score (NPS), customer service inquiries related to specific features, or even social media sentiment analysis. These metrics, while perhaps not directly impacting immediate conversions, contribute to a more holistic understanding of how our changes impact the true underlying construct of customer happiness and loyalty.

The European Union’s General Data Protection Regulation (GDPR) and the California Consumer Privacy Act (CCPA), for instance, have forced companies to rethink how they gain “informed consent” online. This is a direct challenge to the construct validity of previous “consent” mechanisms, where often consent was implied rather than explicitly given. The legal framework is, in essence, demanding better construct validity for terms like “consent” and “privacy”.

Final Thoughts

The pursuit of conversion rate optimisation and exceptional user experience is, at its heart, a scientific endeavour. And like any scientific endeavour, its rigour and ultimate value hinge on the quality of its measurements. We must, therefore, become meticulous cartographers of user behaviour, ensuring that our compasses are perfectly calibrated, that our maps truly reflect the terrain we aim to explore. The often-silent, frequently forgotten guardian of this methodological integrity is construct validity.

It’s not enough to simply run A/B tests and then celebrate with a cup of tea and a muffin. We must dig deeper, ask harder questions, and challenge our assumptions. Are we truly measuring “trust”, “ease of use”, “urgency”, or whatever abstract construct we believe we’re influencing? Or are we merely observing superficial correlations, mistaking the shadow for the substance?

By investing time in clearly defining our theoretical constructs, operationalising them with multi-faceted measures, integrating rich qualitative insights, and ensuring our criteria truly align with long-term business objectives, we elevate our CRO and UX efforts from mere tactical tweaks to strategic, impactful interventions. We move beyond simply changing button colours to truly understanding the intricate dance between human cognition and digital interfaces.

This, folks, is the path to building digital experiences that not only convert but also genuinely resonate, foster loyalty, and deliver lasting value. It’s a challenging journey, sure, but one well worth undertaking. And honestly, it’s a lot more interesting than just staring at numbers all day, isn’t it?