Well, hello there, and welcome back, data warriors! So, you’ve been eyeballing your Google Analytics 4 (GA4) dashboard, feeling pretty chuffed about those user engagement numbers, huh? Hold up a second. Did you account for the bots? Yes, those sneaky, invisible bots that roam the digital landscape and, guess what, mess with your metrics. But hold your horses; not all bots are the villainous creatures we make them out to be.

Let’s set the stage: bot traffic, whether it’s your friendly neighborhood Spider-Man or the Green Goblin, can seriously distort your analytics. We’re talking Wonder Woman-level impacts on everything from engagement rates to average session duration. Can’t tell your bots from your real users? Don’t sweat it; we’re about to deep-dive into the good, the bad, and the ugly bots. Plus, we’re going to chat about how GA4 is both your shield and your Achilles heel in this story.

Picture this: you’ve got a killer ad campaign running on both the Google Ads and Meta Ads platforms, but your bot-inflated metrics make you think it’s a dud. Not to mention, the AI optimising your campaigns has become about as effective as a mashed potato, because it’s been trained on bots instead of humans. Before you can slap a teabag in a cup, you’ve pulled the plug, never knowing you were actually onto a gold mine.

Sure, GA4 tries to keep the baddies out, but let’s face it, some slip through the cracks. So, stay with me as we explore this bot-ridden universe and figure out how to clean up your GA4 data like it’s spring-cleaning day. Let’s jump into the nuts and bots—oops, I mean bolts—of it all!

The Many Faces of Bots: Good, Bad, and Ugly

Ever click that “I’m not a robot” box and then wonder who you’re sharing the digital space with? Spoiler alert: you’re in a sea of bots! A whopping 47% of web traffic in 2022 was bot-driven, leaving us, the humans (if I might be so generous as to call myself that), with only a slim majority in our own playground. Crazy, right? So what kinds of bots are lurking around, and should you be worried?

The old adage says we must know our enemy to defeat it, so let’s lift the veil and take a look at the different types of bots and why they are used.

Scraper Bots: The Content Kidnappers

Picture this: you spend weeks doing research and formulating some top-notch insights that nobody else is talking about. And then, out of nowhere, BAM! It’s been baked into an LLM training dataset or blatantly copied onto someone else’s website or blog. As the AI space heats up (thanks, ChatGPT and Bing AI), more companies want unique content, and some are willing to steal yours to get ahead. Why should you care? First off, your SEO rankings can plummet faster than a lead balloon if a scraped copy starts competing with your original in search results, but more importantly, your intellectual property is now loose in the Wild West of the internet, being paraded around as though it were crafted by someone else. Ouch.

Spam Bots: The Party Crashers

Imagine hosting a dinner party and a stranger barges in, yelling about SEO optimization. Welcome to the world of spam bots. They graffiti your site’s forums, comment sections, and any place where user engagement thrives, effectively derailing the conversation and driving real users crazy. The mess they leave behind? Well, it’s not just about turning dialogues into monologues. Site admins have to deal with this bot-litter while also fighting the SEO impact of spammy content. Your site’s credibility and user experience go for a toss.

Scalper Bots: Don’t Mess With ’Em

These are the F1 drivers of the bot world: fast, cunning, and annoying for anyone not in the race (and a real problem during the height of the pandemic, as I am sure you can remember). Imagine you’re dying to get front-row seats for your guinea pig expo. Just as you’re about to click ‘Buy,’ scalper bots swoop in like a digital tornado and snatch all the tickets.

No expo piggie merch for you, kiddo! What’s the fallout? Apart from you not getting to look at hundreds of adorable guinea pigs, this bot type frustrates real customers and turns your e-commerce site into a black-market ticket hub. It’s a lose-lose, and you could even lose your brand’s good standing in the process.

Monitoring Bots: The Digital Watchdogs

But not all bots are made to wreak havoc. Let’s bring in the good guys, shall we? Monitoring bots are your site’s personal bodyguards. They’re the ones that text you at 3 a.m., but instead of saying, “You up?”, they’re saying, “Your server’s down!” They’re always on guard, ensuring that your website performs like a well-oiled machine. The upside? You can sleep easy, knowing these bots will alert you if your site is ever under attack or if your server decides to take an unplanned vacation. Trust me, in the breakneck world of digital, every second of downtime is a penny lost.

Backlink Checkers: Your Web’s Private Investigators

Who doesn’t like a little bit of gossip, especially when it’s about them? Backlink checkers are the private investigators of your website’s world, combing through the intricate webs of links and mentions, all while delivering this info in a neat package. Why is this so amazing? For one, it’s like having a cheat sheet for your site’s reputation. For another, SEO wizards can use this data to conjure new linking strategies and elevate your site to Google’s first page. Nice!

Web Crawlers: Your Digital PR Agents

Last but absolutely not least are the web crawlers. These bots are the unsung heroes of your digital existence. They meticulously map your site, helping search engines understand your content and serve it to the right people. Without them, your website would be as visible as a needle in a haystack. These bots boost your SEO by making sure you don’t get lost in the maze of the World Wide Web. No more screaming into the digital void, praying for a visitor. They make you relevant, and in the internet game, relevance is royalty.

The Flaws and Fortunes of Google Analytics 4

So you’ve made it this far and you’re still hungry for more on our robotic companions? Good on you! But just know, trying to catalog every single bot is like trying to count stars in the sky. They’re ever-growing and ever-changing, and by the time you’ve blinked, ten more have sprouted up. With tools like Automator for Mac and Power Automate for Windows, anyone can get into the bot-making business.

Now, here’s where the plot thickens like a daytime soap opera: all these bots, whether angels or devils, can muck up your Google Analytics data. If your eyes just widened in disbelief, perhaps it’s time for a tea refill. But worry not, because Google Analytics 4 (GA4) has got some aces up its sleeve. It automatically excludes known bot traffic, drawing its filtering mojo from two main sources: Google’s own research and the IAB/ABC International Spiders and Bots List.

But let’s be real. GA4 isn’t the digital Fort Knox. Despite its shiny veneer, it’s known to let some bots slip through the cracks. And when it happens, it’s usually right when you can’t afford any mistakes, like in the middle of a Black Friday/Cyber Monday (BFCM) campaign. So what’s the game plan when Google’s goalie misses the save? First, don’t panic. Sure, Google’s not perfect, but neither are most things in life.

What you can do is get proactive. Dive into your GA4 admin settings, start setting up data filters, and use comparisons and segments in your reports to set suspicious traffic aside. It might not keep out all the riff-raff, but it’ll definitely reduce the noise.

How to Spot Bot Traffic in Google Analytics 4

First things first, you can’t fight what you can’t see, right? Identifying bot traffic in GA4 is like spotting a scam email: once you know the signs, they stick out like a sore thumb. The main idea is to home in on the metrics that tell you what’s human and what’s just a hunk of code pretending to be one.

Keep in mind that some bots wear pretty fancy disguises, so you might not catch them all with the naked eye, but getting a read on the most obvious offenders is a great place to start.

Unveiling the Mask of Suspicious Sources

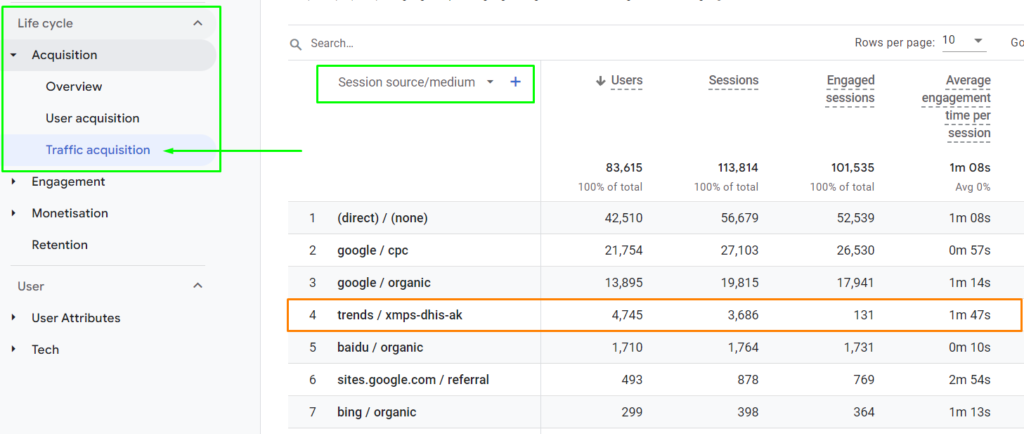

Now here’s a fun part: spotting bots that are blatant about their origins. Some of them don’t even try to hide it. Navigate to Reports > Acquisition > Traffic Acquisition and swap the primary dimension to Source/Medium.

If something looks off, like an unusual source driving a lot of traffic with little to no engagement, you’ve likely found a bot or two. But remember, these are just the lazy bots; others are craftier and require a more in-depth investigation.
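If you export the Traffic Acquisition report (for example, as a CSV), the same eyeball test can be scripted. The sketch below is a minimal Python example, assuming each row carries a source/medium, a session count, and an engaged-session count; the thresholds (`min_sessions=500`, `max_engagement_rate=0.10`) and the sample rows are illustrative assumptions, not GA4 defaults.

```python
from dataclasses import dataclass


@dataclass
class SourceRow:
    source_medium: str
    sessions: int
    engaged_sessions: int


def flag_suspicious_sources(rows, min_sessions=500, max_engagement_rate=0.10):
    """Return (source_medium, engagement_rate) pairs for high-volume, low-engagement sources."""
    flagged = []
    for row in rows:
        if row.sessions < min_sessions:
            continue  # too little traffic to judge either way
        rate = row.engaged_sessions / row.sessions
        if rate <= max_engagement_rate:
            flagged.append((row.source_medium, round(rate, 3)))
    # Worst offenders (lowest engagement) first
    return sorted(flagged, key=lambda item: item[1])


# Hypothetical sample rows, not real report data
rows = [
    SourceRow("google / organic", 4200, 2600),
    SourceRow("example-spam.xyz / referral", 2500, 90),
    SourceRow("newsletter / email", 300, 12),
]
print(flag_suspicious_sources(rows))  # [('example-spam.xyz / referral', 0.036)]
```

Tune the thresholds against your own baseline: a small source with poor engagement may simply point at a weak landing page rather than a bot.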

Conversion Peaks: The Double-Edged Sword

Ah, conversions: the light at the end of every marketer’s tunnel. But beware, not all that glitters is gold. If conversions are shooting up out of nowhere, don’t break out the bubbly just yet. Bots can mimic conversions, throwing your data for a loop. Scrutinize the quality and timing of these conversions to avoid getting duped, particularly if your conversion actions are tied to thank-you page views or unvalidated interactions (such as button or link clicks).
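One way to decide whether a jump deserves that scrutiny is to compare each day against a robust baseline. This minimal Python sketch uses the modified z-score (built from the median and the median absolute deviation), a standard outlier test that a single spike can’t drag around the way it drags a mean. The 3.5 cut-off is a common rule of thumb, and the daily counts are made up for illustration. A flagged day is a prompt to dig in, not proof of bots: a genuine sale can spike conversions too.

```python
from statistics import median


def conversion_spikes(daily_conversions, threshold=3.5):
    """Return days whose conversion count is an upward outlier (modified z-score)."""
    values = list(daily_conversions.values())
    med = median(values)
    mad = median(abs(v - med) for v in values)  # median absolute deviation
    if mad == 0:
        return []  # no variation to compare against
    flagged = []
    for day, count in daily_conversions.items():
        score = 0.6745 * (count - med) / mad
        if score > threshold:  # only upward spikes matter here
            flagged.append(day)
    return flagged


# Hypothetical daily conversion counts
daily = {
    "2023-06-05": 41, "2023-06-06": 38, "2023-06-07": 45, "2023-06-08": 40,
    "2023-06-09": 212, "2023-06-10": 43, "2023-06-11": 39,
}
print(conversion_spikes(daily))  # ['2023-06-09']
```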

Bounce Rates and Low Engagement: A Cautionary Tale

Now let’s chat about bounce rates. A high bounce rate is not always bad; ‘Thank You’ pages are a classic example. But if you’re noticing high bounce rates on cornerstone content, you’ve got an issue. In GA4, bounce rate is simply the share of sessions that were not engaged, so it is the flip side of engagement rate. Take a look at the screenshot above again; do you see anything strange? It’s hard to imagine that only 131 of more than 3,000 sessions were engaged.

Low engagement can often signify bot traffic. Use GA4’s built-in metrics to segment audiences based on engagement and sift through the data.
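If you work with raw session data (for example, from the GA4 BigQuery export), it helps to know how GA4 decides what counts as engaged: a session that lasts longer than 10 seconds (the default, which is adjustable), has a key event (conversion), or has at least two page or screen views. The minimal sketch below applies that rule to hypothetical, pre-aggregated session records. The `Session` fields are assumptions, since the export itself stores events rather than ready-made sessions.

```python
from dataclasses import dataclass


@dataclass
class Session:
    duration_seconds: float
    page_views: int
    had_conversion: bool


def is_engaged(session, min_seconds=10):
    """Apply GA4's engaged-session rule to one (hypothetical) session record."""
    return (
        session.duration_seconds > min_seconds
        or session.had_conversion
        or session.page_views >= 2
    )


def engagement_summary(sessions, min_seconds=10):
    total = len(sessions)
    if total == 0:
        return {"sessions": 0, "engaged": 0, "engagement_rate": 0.0, "bounce_rate": 0.0}
    engaged = sum(1 for s in sessions if is_engaged(s, min_seconds))
    rate = engaged / total
    # In GA4, bounce rate is the share of sessions that were NOT engaged
    return {"sessions": total, "engaged": engaged,
            "engagement_rate": rate, "bounce_rate": 1 - rate}


sessions = [
    Session(2.0, 1, False),   # quick single-page hit: not engaged
    Session(45.0, 3, False),  # long, multi-page visit: engaged
    Session(0.5, 1, False),   # not engaged
    Session(8.0, 1, True),    # short, but converted: engaged
]
print(engagement_summary(sessions))
```

Running the same summary per source, landing page, or region is what surfaces the low-engagement pockets bots tend to leave behind.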

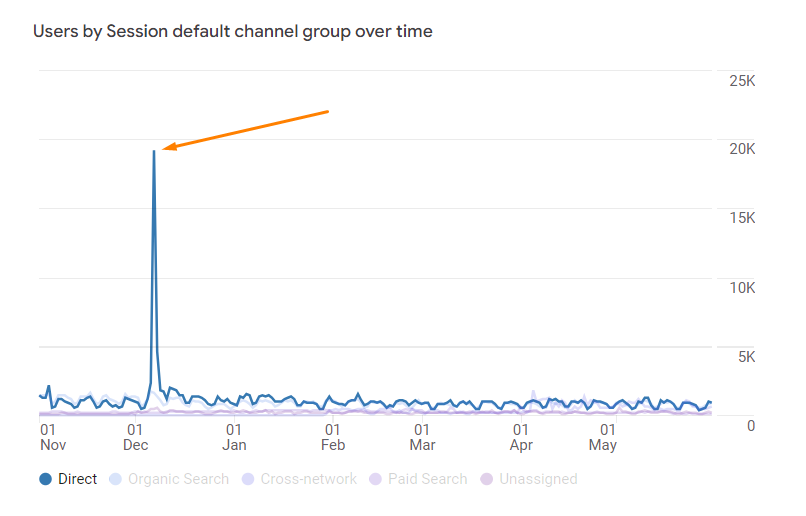

The Lowdown on Unassigned or Direct Traffic

Then there’s the enigmatic ‘Direct’ or ‘Unassigned’ traffic. Google usually does a good job of categorizing where your traffic comes from. Bots, however, like to live off the grid, showing up as ‘Direct’ or ‘Unassigned’, often because they are missing fields that are normally sent when a legitimate user causes an event to fire.

Check these categories for abnormal spikes and then cross-reference them with other metrics like session duration and bounce rate.
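Cross-referencing can also be scripted. This minimal Python sketch assumes a hypothetical daily export of `(date, channel, sessions, avg_engagement_seconds)` rows. It treats each channel’s median daily sessions as its baseline and flags Direct or Unassigned days that both spike (at least 2× baseline) and show almost no engagement time (5 seconds or less). Both thresholds are assumptions to tune against your own data.

```python
from statistics import median


def flag_direct_spikes(rows, channels=("Direct", "Unassigned"),
                       spike_factor=2.0, max_avg_seconds=5.0):
    """rows: list of (date, channel, sessions, avg_engagement_seconds) tuples."""
    flagged = []
    for channel in channels:
        channel_rows = [r for r in rows if r[1] == channel]
        if not channel_rows:
            continue
        # Median daily sessions for this channel serves as its "normal" level
        baseline = median(r[2] for r in channel_rows)
        for date, _, sessions, avg_seconds in channel_rows:
            if sessions >= spike_factor * baseline and avg_seconds <= max_avg_seconds:
                flagged.append((date, channel))
    return flagged


# Hypothetical sample data
rows = [
    ("2023-06-01", "Direct", 200, 35.0),
    ("2023-06-02", "Direct", 220, 40.0),
    ("2023-06-03", "Direct", 950, 2.0),
    ("2023-06-04", "Direct", 210, 38.0),
    ("2023-06-01", "Unassigned", 20, 10.0),
    ("2023-06-02", "Unassigned", 25, 12.0),
    ("2023-06-03", "Unassigned", 24, 3.0),
    ("2023-06-03", "Organic Search", 900, 50.0),
]
print(flag_direct_spikes(rows))  # [('2023-06-03', 'Direct')]
```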

The Geographic Red Flags: Not All That Glitters is Gold

Before we wrap up, let’s talk geography. Seeing a traffic surge from regions you’re not targeting? Hold the champagne! Navigate to Reports > User Attributes > Overview and give that geographic data a hard look. Bots often mimic users from various regions to throw you off track, but there are often some telltale signs that give them away.

Check for regions where new users nearly equal total users, as these bots often arrive in waves and can’t persist an identity between sessions. Also, look for very low engagement rates, or large user counts with no conversions. Keep in mind that you are trying to develop a ‘fingerprint’ for your bot traffic, but don’t make it too broad, or you might exclude legitimate users.
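To keep that fingerprint deliberately conservative, you can require every signal to fire at once. The Python sketch below assumes hypothetical per-region rows exported from the report (total users, new users, engagement rate, conversions); the thresholds and the placeholder region names are illustrative assumptions.

```python
def geo_fingerprint(rows, min_users=200, min_new_ratio=0.95, max_engagement=0.10):
    """Return regions where ALL bot-like signals fire together.

    rows: dicts with keys region, total_users, new_users, engagement_rate, conversions.
    """
    suspects = []
    for r in rows:
        if r["total_users"] < min_users:
            continue  # too small a sample to fingerprint
        new_ratio = r["new_users"] / r["total_users"]
        if (new_ratio >= min_new_ratio
                and r["engagement_rate"] <= max_engagement
                and r["conversions"] == 0):
            suspects.append(r["region"])
    return suspects


# Hypothetical sample data with placeholder region names
rows = [
    {"region": "Region A", "total_users": 5000, "new_users": 3100, "engagement_rate": 0.58, "conversions": 120},
    {"region": "Region B", "total_users": 1800, "new_users": 1790, "engagement_rate": 0.03, "conversions": 0},
    {"region": "Region C", "total_users": 150, "new_users": 150, "engagement_rate": 0.0, "conversions": 0},
]
print(geo_fingerprint(rows))  # ['Region B']
```

Requiring all three signals trades some missed bots for fewer real visitors caught in the net, which matches the caution above about not over-excluding.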

Practical Measures: Your Defence Foundation

So you’ve dropped down the rabbit hole that is your GA4 data, and some bot-like patterns are emerging. Like Alice (in Wonderland), you can’t just call it a day and stay down there; you need a game plan. Bot management in GA4 is not just a task; it’s a full-fledged strategy. We’re talking about a world where bots evolve faster than you can say “tea for two”. Think of it as an ongoing battle where the rules of engagement change faster than TikTok trends. But you don’t have to fight this with your teacup and saucer. You have some serious tools in your arsenal.

Data Analytics and Machine Learning: The Cutting Edge

Let’s kick things off with the super cool, sci-fi-esque side of things: data analytics and machine learning (ML). Many people think that ML is only for the big players, but don’t disqualify yourself just yet, as you may be overlooking a solution that could be a game changer for your data.

Start off by identifying and labeling bot traffic using demographic and behavioral data because, let’s face it, bots behave differently than humans. Once you’ve got your data labeled, train a BigQuery ML classification model (a logistic regression or boosted tree classifier, for example) to distinguish between legit traffic and the bots. But don’t just set it and forget it: evaluate the model using statistical measures like precision, recall, and the F1 score to make sure it’s as sharp as a tack.
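BigQuery ML’s `ML.EVALUATE` function reports these metrics for classification models, but it helps to see what they actually measure. Precision asks how many of the sessions you flagged as bots really were bots (low precision means blocking real customers); recall asks how many of the real bots you caught; F1 is their harmonic mean. The plain-Python sketch below computes all three from hypothetical labels and predictions.

```python
def classification_metrics(actual, predicted, positive="bot"):
    """Precision, recall and F1 for the `positive` class."""
    pairs = list(zip(actual, predicted))
    tp = sum(1 for a, p in pairs if a == positive and p == positive)  # bots caught
    fp = sum(1 for a, p in pairs if a != positive and p == positive)  # humans wrongly flagged
    fn = sum(1 for a, p in pairs if a == positive and p != positive)  # bots missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Hypothetical labelled sessions and model predictions
actual = ["bot", "bot", "human", "human", "bot", "human", "human", "bot"]
predicted = ["bot", "human", "human", "bot", "bot", "human", "human", "bot"]
print(classification_metrics(actual, predicted))  # (0.75, 0.75, 0.75)
```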

After you’ve verified that it’s up to snuff, use it to make predictions about incoming traffic. And here’s the kicker: Use those predictions to take immediate action against bot traffic, whether that’s blocking, re-routing, or whatever else tickles your fancy. It’s like your own personal Minority Report, but for website traffic.

Adaptability is Key: Learn from Your Mistakes

Alright, so you’ve got your tech set up. But let’s be real; mistakes will happen. What sets the pros apart is how they handle the aftermath. No plan survives contact with the enemy, right? That’s where adaptability comes into play. Conducting a post-incident analysis isn’t just smart; it’s essential.

Ask the hard questions: Why did this happen? How did it happen? And most importantly, how can we make sure it doesn’t happen again? Revisit your strategies, tweak them, and always be prepared to pivot. And don’t forget to document and disseminate; if you don’t, you will find yourself stuck in an inescapable Groundhog Day loop.

Robots.txt and IP Filtering: The Good, the Bad, and the Ugly

Moving on to something a bit more classic: robots.txt files and IP filtering. These might be old school, but they’re not going out of fashion anytime soon. Robots.txt files are like the bouncers of your website, telling bots where they can and can’t go. The catch is that robots.txt is a request, not a lock: well-behaved crawlers honor it, but, as we saw above, not all bots are law-abiding citizens. Some just ignore your robots.txt and waltz right in.
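Python’s standard library includes `urllib.robotparser`, which performs the same check a polite crawler runs before fetching a page. The minimal sketch below parses a hypothetical robots.txt (the paths and the `BadScraperBot` user agent are invented for illustration). Notice that nothing here blocks anything: a crawler that never runs this check simply isn’t stopped.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: keep everyone out of checkout/account pages,
# and ask one (made-up) scraper to stay away entirely.
ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/
Disallow: /account/

User-agent: BadScraperBot
Disallow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for agent, url in [
    ("Googlebot", "https://www.example.com/blog/post"),
    ("Googlebot", "https://www.example.com/checkout/step-1"),
    ("BadScraperBot", "https://www.example.com/blog/post"),
]:
    # can_fetch() only reports what the file asks for; compliance is voluntary
    print(agent, url, parser.can_fetch(agent, url))
```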

IP filtering takes it up a notch. It’s like having a blacklist for your club, keeping the known troublemakers out at the door. The problem? IP addresses change, and you’ll have to keep that list updated. But don’t 404 on me just yet; there’s plenty of technology, such as plugins and apps for your website, that can help you manage that without spending hours sifting through it.
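Under the hood, an IP blocklist is just a set of address ranges, and Python’s standard `ipaddress` module can do the matching. In this minimal sketch the blocklist holds the reserved documentation ranges (192.0.2.0/24, 198.51.100.0/24, 2001:db8::/32) as stand-ins for real offenders, and treating malformed addresses as blocked is a policy assumption, not a rule. Keeping the list current is the hard part, which is what the plugins and apps mentioned above automate.

```python
import ipaddress

# Placeholder blocklist: reserved documentation ranges stand in for real offenders
BLOCKLIST = [
    ipaddress.ip_network(cidr)
    for cidr in ("192.0.2.0/24", "198.51.100.0/24", "2001:db8::/32")
]


def is_blocked(ip: str) -> bool:
    """True if `ip` falls inside any blocklisted network."""
    try:
        addr = ipaddress.ip_address(ip)
    except ValueError:
        return True  # malformed address: treated as suspicious (a policy choice)
    # An IPv4 address never matches an IPv6 network (and vice versa); `in` returns False
    return any(addr in network for network in BLOCKLIST)


for candidate in ("192.0.2.45", "203.0.113.7", "2001:db8::1", "not-an-ip"):
    print(candidate, is_blocked(candidate))
```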

Proactive Security Measures: Your First Line of Defense

And finally, we circle back to the basics—proactive security measures. Before bots even get a chance to mess with your data, you should have barriers up, like a Web Application Firewall (WAF). Think of this as your moat, filled with digital alligators. Security plugins and apps are like your castle walls—another layer of defense. And let’s not forget CDNs, which act like the archers on your castle walls, distributing the traffic load and fending off potential DDoS attacks.
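Many WAFs and CDNs let you add rate-based rules that cap how many requests a single client can make in a given window, which blunts scrapers and scalper bots alike. The minimal Python sketch below shows the sliding-window idea behind such rules; it is an illustration, not any vendor’s implementation, and the default of 100 requests per 60 seconds is an arbitrary assumption.

```python
from collections import defaultdict, deque


class SlidingWindowLimiter:
    """Allow at most `limit` requests per client IP within `window_seconds`."""

    def __init__(self, limit=100, window_seconds=60.0):
        self.limit = limit
        self.window = window_seconds
        self.hits = defaultdict(deque)  # client IP -> timestamps of allowed requests

    def allow(self, client_ip: str, now: float) -> bool:
        # `now` is passed in for testability; in production use time.monotonic()
        q = self.hits[client_ip]
        # Drop timestamps that have fallen out of the window
        while q and now - q[0] >= self.window:
            q.popleft()
        if len(q) >= self.limit:
            return False  # over the limit: reject (a WAF might block or challenge)
        q.append(now)
        return True


limiter = SlidingWindowLimiter(limit=3, window_seconds=10)
print([limiter.allow("198.51.100.9", t) for t in (0, 1, 2, 3)])  # [True, True, True, False]
print(limiter.allow("198.51.100.9", 10.5))  # True: the request at t=0 has aged out
```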

Final Thoughts

Phew! What a roller coaster! The world of bots is complex, expansive, and always evolving. It’s like chasing a shadow—you get close, and it’s already morphed into something else. GA4 does give us some peace of mind with its filtering mechanisms, but remember, it’s not the be-all and end-all. Think of it like antivirus software; it’ll catch a lot but might miss the latest trickster to join the gang. The trick is to stay proactive, keeping your eyes peeled for odd patterns and anomalies that scream “I’m a bot, catch me if you can!”

As I say, this is an ongoing battle, and one that you’ve got to be consistently engaged in to protect your digital turf. So, whether you’re a one-person army or have a dedicated data science team, don’t underestimate the power of vigilance and smart analytics practices. And yes, machine learning isn’t just for the tech giants. Even if you’re running a boutique online store, there’s affordable ML-based software that can help you filter out bot traffic like a pro.

Don’t let the bots make a fool out of you. Take control of your GA4 settings, set up those custom filters, and make your data as clean as the freshest Tasmanian water. After all, in the digital world, data integrity isn’t just a nicety; it’s a necessity. Until next time, keep your analytics clean and your decision-making sharp!